
DLSS 4: Your GPU’s Midlife Crisis Fix

by Pranav Rajesh, on 19/03/2025

If you’re wondering why I chose that name as the title for this article, it’s because if there’s one piece of software that can turn back time, it’s DLSS (Deep Learning Super Sampling).

JK, it can’t do all that (although I wish). But what it can do is pretty mind-blowing. DLSS basically takes your aging RTX card (DLSS needs the Tensor Cores that only RTX GPUs have), the one that’s been running like a potato lately, and suddenly makes it spit out high-quality frames that look like they were natively rendered in 4K.

As of today, DLSS has pretty much become the backbone of modern PC gaming. Why? Because it drastically reduces the computing power needed to render high-quality frames. Instead of brute-forcing every pixel, DLSS renders fewer of them and uses deep learning to upscale those lower-resolution images so they look like they were rendered in crisp, buttery-smooth 4K glory.

So how exactly does the latest version, DLSS 4, take things even further? How does it level up your gaming experience and make use of deep learning voodoo while doing so?

Find out in the next episode of Dragon Ball Z!

(But seriously—stick around. I promise this is going to be informative and fun. Trust me. :) )

I’ll be focusing on the changes NVIDIA introduced alongside the RTX 50 series GPUs, since that’s when DLSS 4 launched.

Table of Contents

The Evolution of DLSS: CNNs vs Transformers

When NVIDIA introduced DLSS back in 2019, they relied heavily on CNNs (Convolutional Neural Networks). In simpler terms, a CNN slides lots of small learned filters across an image to pick out patterns, and like any neural network it learns by tweaking its weights step by step during training until it finally understands what’s going on. Like me staring at blurry lecture notes until it all suddenly makes sense.

DLSS 1.0 and 2.0 worked by using CNNs to upsample a lower-resolution image and predict what the high-res version should look like. Think of it like enhancing a blurry photo, but instead of you tapping “sharpen” on your phone, it’s an AI trained on extremely high-resolution reference images doing the hard work.

The CNN-based approach was great for its time. DLSS 2.0, in particular, was a massive leap forward. It used temporal data (info from previous frames), motion vectors, and depth buffers to reconstruct sharp images from low-res inputs. But CNNs had limitations.
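To make “temporal data plus motion vectors” concrete, here is a toy sketch of temporal accumulation, the general idea behind temporal anti-aliasing that temporal upscalers build on: reproject last frame’s result using a motion vector, then blend it with the new sample. Everything here is an assumption for illustration (a 1D row of pixels, integer motion, a fixed blend weight `alpha`, and the hypothetical helpers `reproject` and `accumulate`); roughly speaking, DLSS 2 swaps hand-tuned heuristics like this fixed blend for a trained network.

```python
def reproject(history, motion):
    """Shift last frame's pixels along a per-frame motion vector (1D, integer pixels).

    Pixels that move in from off-screen have no history, so they are marked None.
    """
    n = len(history)
    out = [None] * n
    for x in range(n):
        src = x - motion  # where this pixel was in the previous frame
        if 0 <= src < n:
            out[x] = history[src]
    return out


def accumulate(history, current, motion, alpha=0.1):
    """Blend reprojected history with the new sample.

    alpha is the weight given to the new frame: a small alpha gives a stable image
    but reacts slowly to change, which is where ghosting comes from.
    """
    warped = reproject(history, motion)
    result = []
    for h, c in zip(warped, current):
        if h is None:  # no valid history: fall back to the current sample
            result.append(c)
        else:
            result.append((1 - alpha) * h + alpha * c)
    return result


# A bright object (1.0) on a dark background (0.0), moving 1 pixel per frame.
prev = [0.0, 1.0, 0.0, 0.0, 0.0]
curr = [0.0, 0.0, 1.0, 0.0, 0.0]

good = accumulate(prev, curr, motion=1)  # correct motion vector
bad = accumulate(prev, curr, motion=0)   # motion vector missing or wrong
print([round(v, 2) for v in good])
print([round(v, 2) for v in bad])
```

With the correct motion vector, the object lands cleanly at its new spot. With a wrong one, most of its brightness lingers where it used to be: a ghost trail, just like the head-in-front-of-a-wall example below.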

So what was wrong with CNNs?

A better question to ask is:

What do Transformers have that CNNs didn’t?

CNNs are really good at recognizing local patterns—edges, textures, and small details.

But they weren’t great at recognizing global patterns.

For example, a CNN would do a decent job rendering a human head… but it might totally mess up how that head looks when it’s moving in front of a wall. You’d sometimes get weird artifacts, like a gradient tail trailing behind the head as it moves.

Kind of like The Flash leaving a red streak behind when he’s running at the speed of light. (Great show, by the way—10/10 recommend the first couple of seasons.)

As a result, CNN-based DLSS sometimes produced blurry images, and it struggled with unwanted effects like ghosting or smearing when objects moved quickly across the screen. It was like the AI was guessing where things should be, but wasn’t entirely sure, and things got messy.

Enter Transformer Models: DLSS 4’s Biggest Upgrade

With DLSS 4, NVIDIA shifted to a Transformer-based AI model, similar to what powers GPT and other large language models.

But instead of reading text, these Transformers process images, motion vectors, optical flow, and depth data across multiple frames.

So why Transformers?

Transformers are ridiculously good at handling long-range dependencies.

→ In simple terms: they can understand both the small details and the big picture at the same time.

They can also model temporal data far better than CNNs. This means they have a much stronger grasp on how objects move across frames, leading to:

✅ Fewer artifacts like ghosting or smearing

✅ Sharper images, even when things are moving quickly

✅ A more stable, flicker-free image (because they "remember" past frames better)

Below is a comparison of the same scene rendered with the CNN model (left) and the Transformer model (right):

Oh, so am I gonna bore you with some math and big fancy words to sound smart?

Fact check: Maybe just a little, but I promise to keep it spicy.

Let’s dive into how Transformers work under the hood, with a focus on how they apply to DLSS 4, especially when processing frames for upscaling and interpolation. I’ll keep it clear, with some math and notation, but still digestible. Two things give Transformers their edge over CNNs: spatial understanding and temporal understanding.

Spatial Understanding

- Transformers analyze the entire frame holistically, not just in small patches.

- This helps maintain the consistency of details across the entire scene.

Temporal Understanding

- They track objects over time (across multiple frames), improving motion stability.

- Motion vectors + Optical Flow + Transformer magic = super smooth image reconstruction.

OK, now for the tough part.

The magic sauce in Transformers is the attention mechanism, specifically self-attention.

Attention lets the model weigh the importance of different parts of the input when making predictions.

In DLSS 4, think of it this way:

Every pixel (or feature) in a frame can pay attention to other pixels/features in other parts of the frame or in previous frames, to make better decisions about reconstruction or frame generation.

Breaking It Down (Mathematically)

1. Inputs (Tokens)

- For images or video, the input isn’t words (like in GPT), but patches of pixels or feature vectors from CNN-like encoders.

- Each input is converted into a vector representation, called an embedding.

For an image:

Split into patches → Flatten → Project into embeddings.

For DLSS, it’s more likely features extracted from previous frames, motion vectors, optical flow, etc.
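Here is a minimal sketch of the “split into patches → flatten → project” step as it works in a generic vision Transformer, assuming a tiny 4×4 grayscale image, 2×2 patches, and a made-up projection matrix. DLSS’s real tokenizer isn’t public, so treat `patchify`, `embed`, and the weights as hypothetical.

```python
def patchify(image, p):
    """Split an H x W image (list of rows) into non-overlapping p x p patches,
    each flattened row-major into a list of p*p pixel values.
    Assumes H and W are multiples of p."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h, p):
        for left in range(0, w, p):
            patch = [image[top + dy][left + dx] for dy in range(p) for dx in range(p)]
            patches.append(patch)
    return patches


def embed(patch, weights):
    """Linear projection: patch (length n) times weights (n x d) -> embedding (length d)."""
    d = len(weights[0])
    return [sum(patch[i] * weights[i][j] for i in range(len(patch))) for j in range(d)]


image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
patches = patchify(image, p=2)  # 4 patches of 4 pixels each
# Hypothetical "learned" projection from 4 pixel values to a 2-D embedding.
W_embed = [[1, 0], [0, 1], [1, 0], [0, 1]]
tokens = [embed(pt, W_embed) for pt in patches]
print(patches[0], tokens[0])
```

Each entry of `tokens` is one embedding vector, which is what the attention layers below actually operate on.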

2. Query, Key, Value (Q, K, V) Vectors

Each input embedding is transformed into three vectors:

- Q (Query)

- K (Key)

- V (Value)

This is done via learned weight matrices:

$$Q = XW^Q, \qquad K = XW^K, \qquad V = XW^V$$

where $X$ is the input embedding (features from image patches) and $W^Q$, $W^K$, $W^V$ are weight matrices learned during training.

3. Attention Score Calculation

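For each Query, the model calculates how much attention to pay to every Key in the sequence (spatial and temporal):

$$\text{Scores} = \frac{QK^\top}{\sqrt{d_k}}$$

where $d_k$ is the dimension of the key vectors, used to normalize the scores (without it, dot products grow with the vector size and push the next step toward extreme values).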

This gives you a score matrix, which represents how much each token (patch/feature) should attend to every other one.

4. Softmax Normalization

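The attention scores are converted into probabilities (attention weights) using Softmax, applied to each row of the score matrix:

$$\text{softmax}(z)_j = \frac{e^{z_j}}{\sum_{m} e^{z_m}}, \qquad \text{Weights} = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)$$

This ensures each token’s weights add up to 1, so they can be read as “how much of each other token to mix in.”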

5. Weighted Sum of Values

Each Query uses the attention weights to sum up the Value vectors (which contain the actual data):

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

This gives a new representation of each input, now informed by other regions in the frame and even other frames.
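Steps 2 to 5 fit in a few lines. Here is a minimal pure-Python sketch of scaled dot-product self-attention, $\text{softmax}(QK^\top/\sqrt{d_k})V$, on three toy tokens. The weight matrices are made-up numbers standing in for learned ones, and the helper names are hypothetical; this is the textbook operation, not NVIDIA’s code.

```python
import math


def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X, Wq, Wk, Wv):
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)  # step 2: projections
    d_k = len(K[0])
    scores = [[s / math.sqrt(d_k) for s in row]             # step 3: QK^T / sqrt(d_k)
              for row in matmul(Q, transpose(K))]
    weights = [softmax(row) for row in scores]              # step 4: each row sums to 1
    return matmul(weights, V), weights                      # step 5: weighted sum of V


# Three tokens (e.g. three patch features), embedding size 2.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Wq = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical "learned" weights
Wk = [[1.0, 0.0], [0.0, 1.0]]
Wv = [[2.0, 0.0], [0.0, 3.0]]
out, attn = self_attention(X, Wq, Wk, Wv)
for row in attn:
    print([round(w, 3) for w in row])
```

Token 0 (`[1, 0]`) matches tokens 0 and 2 equally well and token 1 not at all, so its attention row splits most of its weight between tokens 0 and 2.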

Multi-Head Self-Attention

Transformers don’t just run one attention calculation.

They run multiple attention heads in parallel, each focusing on different types of relationships.

- Each head has its own Q/K/V weights and learns different relationships.

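- The outputs are concatenated and linearly transformed by an output weight matrix $W^O$:

$$\text{MultiHead}(X) = \text{Concat}(\text{head}_1, \dots, \text{head}_h)\,W^O, \qquad \text{head}_i = \text{Attention}(XW_i^Q, XW_i^K, XW_i^V)$$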
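A sketch of multi-head attention under the same toy assumptions: two heads, each with its own made-up Q/K/V weights, whose outputs are concatenated per token and multiplied by an output matrix $W^O$. Head count, sizes, weights, and the helper names are all illustrative.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, computed one query row at a time."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        w = [e / total for e in exps]
        out.append([sum(w[j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))])
    return out


def multi_head(X, heads, Wo):
    """heads: list of (Wq, Wk, Wv) tuples, one per head."""
    per_head = [attention(matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)) for Wq, Wk, Wv in heads]
    # Concatenate the head outputs along the feature axis for each token.
    concat = [sum((h[t] for h in per_head), []) for t in range(len(X))]
    return matmul(concat, Wo)


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]                  # 3 tokens, d_model = 2
head_a = ([[1.0], [0.0]], [[1.0], [0.0]], [[1.0], [0.0]])  # only sees feature 0
head_b = ([[0.0], [1.0]], [[0.0], [1.0]], [[0.0], [1.0]])  # only sees feature 1
Wo = [[1.0, 0.0], [0.0, 1.0]]                             # 2 concatenated dims -> d_model
Y = multi_head(X, [head_a, head_b], Wo)
print([[round(v, 3) for v in row] for row in Y])
```

Because each head only looks at one feature, the two heads end up relating tokens in different ways, which is the whole point of running several in parallel.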

Temporal and Spatial Understanding

In DLSS 4:

- Spatial Attention helps understand context within the current frame.

- Temporal Attention helps connect information between frames, using motion vectors and optical flow.

This lets DLSS generate in-between frames (frame generation) or reconstruct a high-res image (super resolution).

Position Encoding

Because Transformers have no sense of order by default (unlike CNNs with spatial locality), DLSS likely uses Positional Encoding:

- Adds information about pixel/patch positions in both space (x, y) and time (frames t-1, t, t+1).

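A common choice is the sinusoidal encoding from the original Transformer paper, where $pos$ is the position, $i$ indexes the embedding dimension, and $d_{\text{model}}$ is the embedding size:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)$$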

This gives the model a sense of where a patch is, when it exists, and how it moves.
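Here is the standard sinusoidal encoding, $PE_{(pos,2i)} = \sin(pos/10000^{2i/d})$ and $PE_{(pos,2i+1)} = \cos(pos/10000^{2i/d})$, in code, extended to space and time in the simplest possible way: encode x, y, and the frame index t separately and concatenate the results. That three-axis split is an assumption for illustration (`encode_xyt` is hypothetical); whether DLSS uses sinusoidal, learned, or some other encoding isn’t public.

```python
import math


def sinusoidal(pos, d):
    """Standard sinusoidal encoding of a scalar position into d values (d even)."""
    pe = []
    for i in range(d // 2):
        angle = pos / (10000 ** (2 * i / d))
        pe.extend([math.sin(angle), math.cos(angle)])
    return pe


def encode_xyt(x, y, t, d_per_axis=4):
    """Hypothetical space-time encoding: one sinusoidal block per axis, concatenated."""
    return sinusoidal(x, d_per_axis) + sinusoidal(y, d_per_axis) + sinusoidal(t, d_per_axis)


a = encode_xyt(x=3, y=5, t=0)  # patch at (3, 5) in the current frame
b = encode_xyt(x=3, y=5, t=1)  # same patch location, next frame
print(len(a), [round(v, 3) for v in a[:4]])
```

The two encodings share their x and y blocks and differ only in the time block, so the model can tell “same place, different moment” apart from “different place.”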

The Feedforward Network (FFN)

After attention, the data passes through a Feedforward Neural Network (FFN):

- Adds non-linearity and transformation to better model complex features.

- Typically includes Layer Norm and Residual Connections to stabilize learning.
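The usual Transformer FFN is $\text{FFN}(x) = \max(0,\, xW_1 + b_1)W_2 + b_2$, wrapped in a residual connection and a layer norm. Below is a minimal sketch of that block for a single token, with tiny hypothetical weights. It follows the post-norm layout of the original Transformer; many newer models normalize first instead, and DLSS’s exact layout isn’t public.

```python
import math


def layer_norm(v, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (no learned scale/shift here)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def linear(v, W, b):
    """v (length n) times W (n x m) plus b (length m)."""
    return [sum(v[i] * W[i][j] for i in range(len(v))) + b[j] for j in range(len(b))]


def ffn_block(x, W1, b1, W2, b2):
    hidden = [max(0.0, h) for h in linear(x, W1, b1)]        # expand + ReLU non-linearity
    out = linear(hidden, W2, b2)                             # project back to model size
    return layer_norm([xi + oi for xi, oi in zip(x, out)])   # residual, then LayerNorm


x = [1.0, -2.0]                                       # one token's features after attention
W1 = [[1.0, -1.0, 0.5, 0.0], [0.5, 1.0, -1.0, 1.0]]   # 2 -> 4 (hidden layer is wider)
b1 = [0.0, 0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]  # 4 -> 2
b2 = [0.0, 0.0]
y = ffn_block(x, W1, b1, W2, b2)
print([round(v, 3) for v in y])
```

The ReLU is what adds the non-linearity; the residual (`x + out`) lets the block refine the attention output rather than replace it.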

How This All Comes Together in DLSS 4

1. Input: the current low-resolution frame, plus previous frames, motion vectors, optical flow, and depth buffers.

2. Transformer Encoder: multi-head self-attention extracts spatial and temporal features.

3. Prediction: generates high-res frames from lower-res inputs, and interpolates new frames for smoother motion (frame generation).

4. Output: upscaled images with higher quality, plus AI-generated frames to increase frame rates.

Simple Analogy:

Think of CNNs like someone examining each puzzle piece in isolation.

Transformers are like someone hovering above the entire puzzle, seeing how all the pieces fit together at once—and over time, they track how the pieces are shifting and changing!

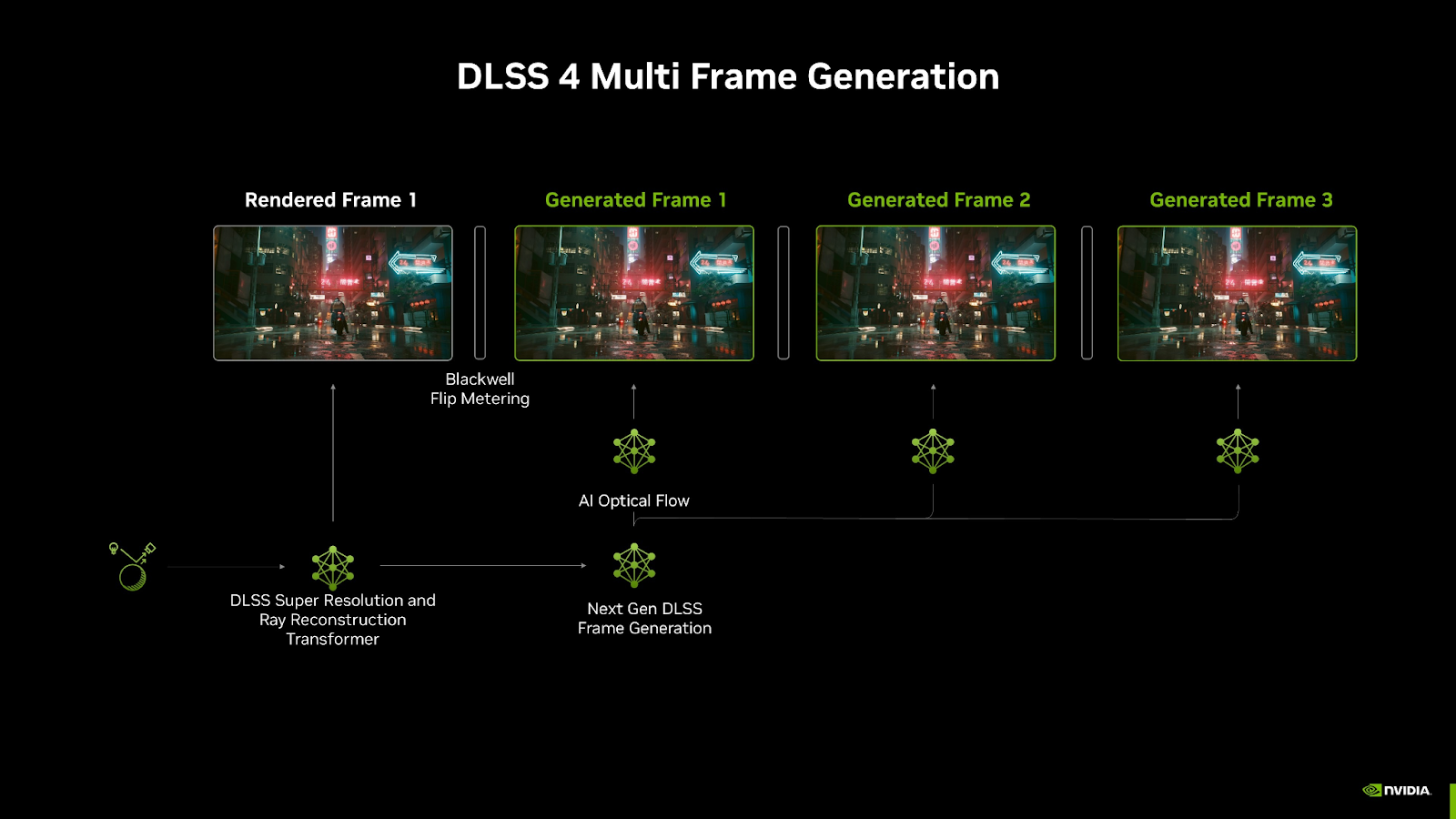

Frame Generation & How AI Makes More Frames From Less

If you’ve ever wished your game could go from choppy to buttery-smooth without upgrading your graphics card (or selling a kidney for a new one), DLSS Frame Generation is what makes that dream kinda possible.

The tech takes a normal game running at 60 FPS and conjures up extra frames out of thin air—well, not exactly thin air, but close enough.

What is Frame Generation?

In simple terms, Frame Generation is NVIDIA’s way of boosting your frame rates by generating entirely new frames between the ones your GPU renders.

Imagine your GPU draws Frame 1 and Frame 2. Normally, it just shows them one after the other. But with DLSS Frame Generation, NVIDIA’s AI steps in and creates a brand new Frame 1.5 to slot between them.

Result?

More frames. Smoother motion. And your GPU doesn’t even have to break a sweat (well, maybe just a little). The catch is input lag, which we’ll get to in a bit.

How Does It Actually Work? (The Nerdy But Fun Version)

DLSS 3 introduced Frame Generation, but in DLSS 4, they’ve made it even smarter. Here’s how the magic happens:

1. Motion Vectors & Optical Flow: The AI’s GPS for Motion Tracking

Motion vectors are like tiny arrows showing the AI where every pixel moves from one frame to the next—kind of like a “you are here” map for pixels.

- Frame 1 says: “This pixel was here.”

- Frame 2 says: “Now it’s here.”

- The AI figures out: “Ah! If it moved that much in that time, I can guess where it was halfway.”
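The “guess where it was halfway” step is plain linear interpolation. Here is a toy sketch, assuming each pixel moved at constant velocity between the two rendered frames and ignoring everything hard (occlusion, lighting changes, UI): place it at $p_1 + t\,(p_2 - p_1)$ with $t = 0.5$. Real frame generation feeds this kind of motion data into a neural network rather than applying the raw formula, and `interpolate_position` and the `tracks` data are hypothetical.

```python
def interpolate_position(p1, p2, t):
    """Linear interpolation between a pixel's position in frame 1 (p1) and frame 2 (p2).

    t = 0 gives frame 1, t = 1 gives frame 2, t = 0.5 gives the "Frame 1.5" guess.
    Assumes the pixel moved at constant velocity between the two frames.
    """
    return tuple(a + t * (b - a) for a, b in zip(p1, p2))


def intermediate_positions(tracks, t=0.5):
    """tracks maps a (hypothetical) pixel/object id to its (x, y) in frame 1 and frame 2."""
    return {pid: interpolate_position(p1, p2, t) for pid, (p1, p2) in tracks.items()}


tracks = {
    "bullet": ((100.0, 50.0), (140.0, 50.0)),    # moved 40 px right
    "player": ((300.0, 200.0), (300.0, 210.0)),  # moved 10 px down
}
print(intermediate_positions(tracks))  # the "Frame 1.5" positions
# Three in-between frames instead of one would sample t = 0.25, 0.5, 0.75.
print([interpolate_position(*tracks["bullet"], t) for t in (0.25, 0.5, 0.75)])
```

The constant-velocity assumption is exactly what breaks on curved or erratic motion, which is why the learned model (and optical flow) matters.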

NVIDIA takes this further with optical flow. Game engines only provide motion vectors for geometry, so they miss things like shadows, reflections, and particle effects (looking at you, explosions) that move across the screen without any geometry moving along with them. Optical flow compares the rendered frames themselves to measure how fast and in which direction every pixel is moving, catching those tricky cases too. In DLSS 3 this ran on the Optical Flow Accelerator (OFA), a dedicated hardware block on RTX GPUs; with DLSS 4, NVIDIA computes the optical flow with an AI model instead.

Think of it like watching a flipbook in slow motion and tracing every moving object. By combining motion vectors with optical flow, AI can generate new frames with greater accuracy, even when movements don’t follow strict patterns.

2. The Transformer AI Makes New Frames

The AI takes:

✅ Frame 1

✅ Frame 2

✅ Motion Vectors

✅ Optical Flow data

And then it goes:

“Cool, I’m gonna invent Frame 1.5 now.”

This is where Transformers come into play. They process all this spatial (what things look like) and temporal (when things happen) data, analyze relationships between pixels across frames, and then generate an entirely new frame that looks like it belongs right there.

3. The Math Magic (Quick and Painless, Promise)

At the heart of the Transformer is the self-attention mechanism.

This is where it decides what pixels should pay attention to what other pixels, across both space (on the same frame) and time (between frames).

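Mathematically, it’s doing something like this:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

Don’t worry, here’s the translation:

- $Q$ = the “question” (what are we focusing on?)

- $K$ = the “key” (where’s the relevant info?)

- $V$ = the “value” (what data do we actually need?)

- softmax, with the $\sqrt{d_k}$ scaling = a fancy way to make sure everything adds up nicely and makes sense.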

The Transformer uses this to blend information from different parts of the image and across different frames to create a realistic-looking new frame.

Less blur, fewer weird artifacts, more smoothness.
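To see the “across different frames” part in action, here is a toy sketch in which one query token for the in-between frame attends over tokens taken from Frame 1 and Frame 2 in a single sequence. The 2-D feature vectors are made-up numbers, and using raw features as keys and values (no learned projections) is a simplification; the point is only that the output is a weighted blend that leans on the most similar tokens from both frames.

```python
import math


def attend(query, keys, values):
    """Scaled dot-product attention for a single query over a list of key/value tokens."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    blended = [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
    return blended, weights


# Hypothetical 2-D features: [edge-ness, brightness]
frame1 = [[1.0, 0.2], [0.0, 0.9]]  # tokens from Frame 1
frame2 = [[0.9, 0.3], [0.1, 0.8]]  # tokens from Frame 2
tokens = frame1 + frame2           # one sequence spanning both frames (space + time)

query = [1.0, 0.25]  # "what should this edge pixel in Frame 1.5 look like?"
blended, weights = attend(query, tokens, tokens)
print([round(w, 3) for w in weights], [round(v, 3) for v in blended])
```

The two “edge” tokens (one from each frame) get the largest weights, so the generated pixel borrows mostly from them.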

But Wait, Doesn’t This Cause Input Lag?

Good question! It can. To build an in-between frame, the GPU needs both the frame before it and the frame after it, so the newest rendered frame has to wait a moment while the generated one is shown first. And generated frames don’t respond to your inputs (like moving your mouse or hitting a key); only the next frame the GPU actually renders does.

NVIDIA tackles this with Reflex, a technology that cuts input lag by keeping the CPU from queuing up frames ahead of the GPU, and it’s switched on automatically whenever DLSS Frame Generation is enabled.

So when you flick your wrist for a quick headshot (or miss it, no judgment *side eye*), Reflex claws back a good chunk of the delay Frame Generation would otherwise add.

Latency & Reflex: Keeping Things Snappy

So, you’ve got all these shiny AI-generated frames thanks to DLSS 4, and your game looks smoother than butter on a hot pancake. But what’s the point of silky smooth frames if every time you click, your bullet leaves the barrel a century later?

That’s input lag, and it can be the difference between clutching the win or rage-quitting to play Minesweeper.

But fear not—NVIDIA Reflex is here to save your reaction times (and your K/D ratio).

What is Latency, Anyway?

Latency (aka system latency) is the time it takes for your action—say clicking your mouse or tapping a key—to show up on your screen.

Imagine you see an enemy pop up, you click to shoot, but by the time the screen updates, they’ve already moved (or you’re already dead).

- The lower your latency, the faster the game reacts to you.

- The higher your latency, the more it feels like you’re gaming underwater, and the more you want to punch the monitor.

Why Frame Generation Can Add Latency

With DLSS Frame Generation, you’re inserting extra frames between the ones your GPU is actually rendering. While this boosts frame rates and makes animations smoother, it also decouples what you see from what’s happening in real time.

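Those AI frames are interpolated: they’re built from two already-rendered frames plus motion data, and they don’t react to anything you do. On top of that, the newest real frame has to wait its turn while the generated one is shown first, which adds a delay between when you move your mouse and when that movement shows up on screen.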
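A back-of-the-envelope sketch of why interpolation costs latency, under a deliberately simple assumption: with one generated frame per rendered frame, the newest rendered frame is held back about half a rendered-frame interval so the in-between frame can go first, plus however long generating it takes. The model, the 1 ms default `gen_cost_ms`, and both function names are hypothetical; real numbers depend on the game, GPU, frame pacing, and Reflex.

```python
def frame_time_ms(fps):
    """Time between rendered frames, in milliseconds."""
    return 1000.0 / fps


def fg_added_latency_ms(base_fps, gen_cost_ms=1.0):
    """Rough added latency of 2x interpolation-based frame generation.

    Simplified model (an assumption, not NVIDIA's): the newest rendered frame is
    held back half a rendered-frame interval so the in-between frame is shown first,
    plus the time spent generating that in-between frame.
    """
    return frame_time_ms(base_fps) / 2 + gen_cost_ms


for fps in (30, 60, 120):
    print(f"{fps} rendered FPS -> ~{fg_added_latency_ms(fps):.1f} ms extra, "
          f"{2 * fps} FPS shown")
```

Even in this crude model, the penalty shrinks as the base frame rate rises, which is why Frame Generation feels best when the game already runs reasonably well before it kicks in.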

Enter: NVIDIA Reflex.

NVIDIA Reflex: Like Bullet Time, But Faster

Reflex cuts system latency by reducing the time it takes for your input to travel through the entire pipeline—from mouse click, to CPU, to GPU, to monitor.

Here’s how it works:

Low Latency Mode:

Reflex tells your CPU and GPU to stop queuing up frames. Instead of preparing a long line of frames waiting to be rendered (which increases latency), Reflex makes the system work just in time, so the newest frame reflects your latest input.

Tighter Synchronization:

Reflex keeps the game engine and your GPU in step: the CPU starts work on a frame only when the GPU is nearly ready for it, so your latest input doesn’t get stuck behind a queue of stale frames, even with Frame Generation in the mix.

Input-to-Display Optimization:

Reflex cuts down the render queue at every stage.

→ Your mouse click → Game engine → GPU → AI-generated frame → Monitor

Reflex keeps all these steps tight, trimming the fat, so your reaction time stays snappy.

The Nerdy (But Fun) Math of Latency

Let’s break it down🕺:

- Total System Latency = Input Latency + Processing Latency + Display Latency

Reflex minimizes processing latency by:

✅ Reducing the CPU render queue

✅ Prioritizing rendering the latest user input

✅ Reducing how long frames are held before being shown

The result? Less delay between click and bang.
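A toy latency budget to make the formula above concrete. It assumes, purely for illustration, that every frame already waiting in the render queue adds one full frame time of processing latency, and that Reflex’s job is to shrink that queue to roughly zero. The 1 ms input and 5 ms display figures are made-up placeholders, not measurements, and `system_latency_ms` is a hypothetical helper.

```python
def system_latency_ms(input_ms, frame_ms, queued_frames, display_ms):
    """Total = input + processing + display.

    Processing is modeled as the frame currently being rendered plus one extra
    frame time for every frame already waiting in the render queue.
    """
    processing_ms = frame_ms * (1 + queued_frames)
    return input_ms + processing_ms + display_ms


frame_ms = 1000.0 / 100  # a game rendering at 100 FPS -> 10 ms per frame
queued = system_latency_ms(input_ms=1.0, frame_ms=frame_ms, queued_frames=2, display_ms=5.0)
just_in_time = system_latency_ms(input_ms=1.0, frame_ms=frame_ms, queued_frames=0, display_ms=5.0)
print(f"queued: {queued:.0f} ms, just-in-time: {just_in_time:.0f} ms")
```

Same FPS, same monitor, same mouse: the only thing that changed is how many frames were waiting in line, and that alone accounts for the difference.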

Reflex in Competitive Games

Reflex isn’t just a fancy name. In competitive games like Valorant, CS2, and Apex Legends, every millisecond counts.

- Without Reflex, latency can be 30ms–60ms (or more), though the exact numbers vary a lot with the game, GPU, and settings.

- With Reflex, that can drop to something like 10ms–20ms, which is huge in the esports world, where headshots are measured in pixels and milliseconds.

And yes, Reflex works when DLSS Frame Generation is on. In fact, it’s switched on automatically whenever Frame Generation is enabled, which is crucial because AI-generated frames would otherwise add noticeable delay.

Reflex + DLSS = High FPS + Low Latency

You get:

🎮 Higher frame rates with Frame Generation

⚡ Lower latency with Reflex

✅ The best of both worlds: smooth visuals without turning your inputs into a slideshow (unlike Blue Lock LMAO).

Hardware Limitations & Requirements

“Can my potato run this?”—a question as old as time (or at least as old as Crysis). But when it comes to DLSS 4 and all its AI wizardry, we need to have a real talk about hardware requirements—and whether your setup is ready to handle it.

DLSS 4 Needs Some Serious Muscle

Let’s get one thing out of the way: DLSS 4 isn’t just a software update you can slap onto your grandpa’s old GTX 960 and expect 4K magic. This tech relies heavily on AI acceleration, which means it needs the dedicated Tensor Cores found only in RTX cards, and the fanciest features need the newest ones.

Which RTX Cards Get Which DLSS 4 Features?

The upgraded Transformer models for Super Resolution, Ray Reconstruction, and DLAA work on any GeForce RTX card. Frame Generation is where the lines get drawn:

- ✅ RTX 50 series (Blackwell): everything, including Multi Frame Generation, which generates up to three frames for every rendered one.

- ✅ RTX 40 series (Ada Lovelace): the new Transformer models plus regular Frame Generation (one generated frame per rendered frame).

- ✅ RTX 20 and 30 series: the new Transformer models, but no Frame Generation.

- ❌ GTX cards: no Tensor Cores, no DLSS at all.

Why the cutoff? The cynical answer: money LMAO. RTX 30-series GPUs do have an Optical Flow Accelerator (OFA), just an older, slower one, so technically they could generate frames to some extent. NVIDIA’s stated reason is that the older hardware isn’t fast enough for a good experience; skeptics call it market segmentation. Either way, RTX 30-series owners still get DLSS Super Resolution just fine, including DLSS 4’s Transformer model; they just miss out on the Frame Generation perks.

What Makes RTX 40-Series Special?

- 4th-Gen Tensor Cores: These power the AI super sampling magic, boosting performance without sacrificing image quality.

- 3rd-Gen Optical Flow Accelerators (OFA): This piece predicts motion between frames far more accurately, which is key for Frame Generation.

- Shader Execution Reordering (SER): A fancy trick that makes rendering more efficient, improving ray tracing and helping DLSS run smoother.

These hardware bits work together to process super-resolution, frame interpolation, and latency reduction in real-time. Without them, DLSS 4 would be like trying to run Cyberpunk 2077 on a calculator.

CPU & RAM: Are They Important?

Absolutely—but not as much as they used to be.

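With DLSS Frame Generation, the generated frames are produced entirely on the GPU, without the CPU having to simulate or prepare them. That makes it especially handy when you’re CPU-bound: your displayed frame rate can climb even when the CPU is the bottleneck.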

✅ Good news: If your CPU is getting a little old, DLSS 4 and Frame Generation can help you hit high frame rates anyway.

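🚫 Bad news: The CPU still runs the game logic (AI, physics, etc.) and sets the pace for the frames that actually respond to your input, so a struggling CPU will still make the game feel sluggish, however high the FPS counter climbs.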
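A simplified throughput model for the CPU question: the rendered frame rate is capped by whichever of the CPU or GPU is slower, and 2x Frame Generation then roughly doubles what you see, minus some GPU time spent generating. The 10% `fg_overhead` figure and both function names are hypothetical, not benchmarks.

```python
def rendered_fps(cpu_fps, gpu_fps):
    """Frames actually simulated and rendered: limited by the slower component."""
    return min(cpu_fps, gpu_fps)


def displayed_fps(cpu_fps, gpu_fps, fg_factor=1, fg_overhead=0.10):
    """Return (frames shown on screen, frames actually rendered).

    fg_factor: 1 = Frame Generation off, 2 = one generated frame per rendered frame.
    fg_overhead: hypothetical fraction of GPU time spent generating frames,
    which lowers the GPU's own rendering rate when Frame Generation is on.
    """
    if fg_factor > 1:
        gpu_fps = gpu_fps * (1 - fg_overhead)
    base = rendered_fps(cpu_fps, gpu_fps)
    return base * fg_factor, base


# CPU-bound scenario: the CPU can only prepare 60 frames/s, the GPU could do 140.
shown, base = displayed_fps(cpu_fps=60, gpu_fps=140, fg_factor=2)
print(shown, base)  # shown frames double, but game logic still runs at the CPU's pace
```

In the CPU-bound case the GPU has spare time to spend on generated frames, so the on-screen number doubles while responsiveness stays tied to the 60 rendered frames.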

For RAM, 16GB is still the sweet spot for most games, but more is always better—especially for big open-world titles or heavy multitasking.

Monitor Requirements: More Frames = Better Refresh Rates

What’s the point of DLSS Frame Generation giving you 200 FPS if your monitor’s stuck at 60Hz?

- High refresh rate monitors (120Hz, 144Hz, or 240Hz+) are key to taking full advantage of the extra frames.

- G-Sync Compatible monitors can also help eliminate screen tearing and stuttering, syncing frame delivery with your display’s refresh rate.

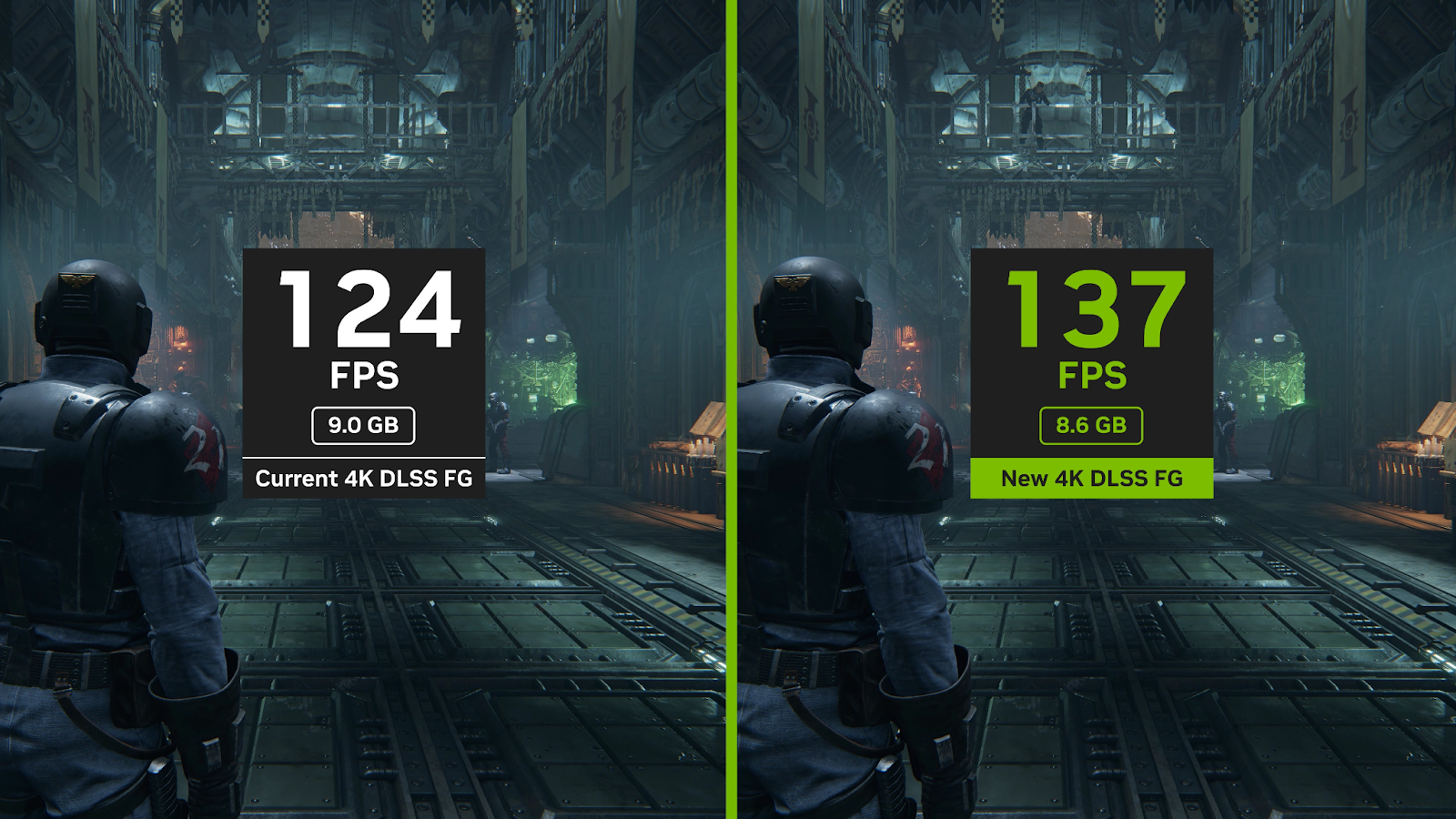

Quality Improvements Over DLSS 3

If It Ain’t Broke… NVIDIA Fixes It Anyway

That’s basically the philosophy behind DLSS 4. DLSS 3 was already doing some wild sci-fi stuff—pulling extra frames out of thin air, making our GPUs look like overachievers. But DLSS 4? It takes all that wizardry, puts on a cape, and flies circles around it. Let’s break it down.

Sharper Image Quality (Bye-Bye Blurryville)

Remember in DLSS 3 when fast-paced scenes sometimes looked like you were peeking through a smudged window? Yeah, not great. There was ghosting, smearing, shimmering—basically all the stuff you don’t want when you’re gunning down enemies at 200 mph.

DLSS 4 swaps out those greasy visuals for something a lot cleaner. Thanks to its Transformer-powered brain, it handles fine details like a pro.

👉 Result: Crisp textures, sharper edges, and fewer “what the hell was that?!” moments, even when things are blowing up all around you.

Better Motion Clarity (Because Ghosts Should Stay in Horror Games)

DLSS 3 sometimes left little ghost trails behind fast-moving objects. Like your character had a spirit twin tagging along. Fun? Maybe. Immersive? Not really.

With DLSS 4, the AI gets motion prediction right far more often. It has a much better sense of where things are going, so objects stay clean and focused.

👉 You move, you aim, you flick—and no spectral nonsense follows you around.

Smarter Frame Generation (The AI Grew a Bigger Brain)

DLSS 3’s Frame Generation was decent. It guessed what an in-between frame should look like by using the last two frames as references. But sometimes, especially in chaotic scenes, it looked like it had a panic attack.

Enter DLSS 4: a new, faster AI model handles frame generation, and on RTX 50-series cards it can generate up to three frames for every one your GPU renders (Multi Frame Generation) instead of just one.

👉 You get more accurate frames, smoother animations, and less of that “wait, what just happened?” feeling when stuff gets crazy.

Reduced Latency (Because Timing Is Everything)

The downside of extra frames in DLSS 3? A bit of added latency. It was like getting an awesome sports car but realizing the steering was a tiny bit delayed.

DLSS 4 says “nah” to that. Reflex is switched on alongside Frame Generation, so the extra latency is kept small. (Not zero, but small.)

👉 Faster response times = better aim (debatable, considering the user’s skill issue), snappier movement, and maybe, just maybe, a few more headshots. (Reflex + Frame Generation = your inner Aceu unlocked. Man, I still miss that tap-strafing beast.)

Better Ray Tracing (RTX ON Without Melting Your Rig)

Ray tracing used to be that feature you wanted to turn on but didn’t—unless you liked single-digit FPS or had a PC cooled by liquid nitrogen.

DLSS 4 makes ray tracing way more doable. Higher-quality upscaling and frame generation mean you can finally crank those RTX sliders without hearing your GPU beg for mercy.

👉 Smooth gameplay and fancy reflections? Yes, please.

Cleaner UI and HUD (Because Blurry Crosshairs Are a Crime)

One of DLSS 3’s quirks was that UI elements—like health bars and crosshairs—sometimes looked a little fuzzy, like your character needed glasses.

DLSS 4’s smarter AI makes sure those elements stay sharp and clear, no matter how hectic things get on screen.

👉 Now you can actually see what you’re aiming at.

DLSS 4 = DLSS 3.5 with Extra Sauce

DLSS 3.5 already threw Ray Reconstruction into the mix, swapping out old-school denoisers for AI-driven magic. DLSS 4 builds on that and cranks it up:

✅ Cleaner lighting and reflections.

✅ Sharper visuals without weird flickers or shadow wiggles.

✅ Overall stability, so your game looks less like a rave and more like a polished experience.

In short? DLSS 4 doesn’t reinvent the wheel—it straps a jet engine to it and calls it a day.

DLAA & Other Features

If DLSS is the cheat code for better performance, DLAA (Deep Learning Anti-Aliasing) is its classy cousin that doesn’t care about FPS but loves making things look gorgeous.

DLAA: Pure Quality, No Upscaling Shenanigans

- DLAA skips the upscaling party entirely. It runs at native resolution but applies NVIDIA’s deep learning magic to deliver insanely sharp anti-aliasing.

- Think super clean edges, no jaggies, and no shimmering, especially in fine details like fences, wires, or tree leaves.

- Ideal if you’re already getting high FPS and want visuals that’ll make you cry tears of joy. (Or show off your rig’s muscles.)

Basically, DLAA = Native resolution + AI-powered anti-aliasing. No upscaling. Just pure eye candy.

Ray Reconstruction (From DLSS 3.5, Built Into DLSS 4)

- Swaps out traditional denoisers with AI-driven ray reconstruction for ray-traced lighting, shadows, and reflections.

- Results? Cleaner, sharper, and more realistic ray-traced effects with fewer visual artifacts.

- Especially noticeable in games like Cyberpunk 2077 and Alan Wake 2 where ray tracing is dialed up to 11.

NVIDIA Reflex Integration

- Reduces system latency, making games snappier and more responsive.

- Critical when you’re using Frame Generation, so you don’t feel like you’re aiming through molasses.

- DLSS 4 and Reflex are BFFs—giving you both smooth frames and fast reactions.

DLSS Ultra Performance Mode (For the 8K Dreamers)

- DLSS has different presets: Quality, Balanced, Performance, and Ultra Performance (added back in DLSS 2.1 with 8K in mind).

- Lets you run games at crazy high resolutions like 8K by rendering at a super low internal resolution and upscaling.

- You won’t believe your potato could do this.
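To see what “super low internal resolution” means in practice, here is a small calculator using the per-axis render scales commonly cited as DLSS defaults (Quality ≈ 66.7%, Balanced ≈ 58%, Performance 50%, Ultra Performance ≈ 33.3%, DLAA 100%). Treat those ratios as typical defaults, not guarantees: games and newer DLSS versions can override them, and `internal_resolution` is a hypothetical helper.

```python
# Typical per-axis render scales for DLSS presets (games can override these).
PRESET_SCALE = {
    "DLAA": 1.0,
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w, out_h, preset):
    """Resolution the game actually renders at before DLSS upscales to out_w x out_h."""
    s = PRESET_SCALE[preset]
    return round(out_w * s), round(out_h * s)


for preset in PRESET_SCALE:
    w, h = internal_resolution(7680, 4320, preset)  # 8K output
    pixel_share = (w * h) / (7680 * 4320)
    print(f"{preset:>17}: {w}x{h} ({pixel_share:.0%} of the output pixels)")
```

Because the scale applies to both width and height, Ultra Performance at 8K renders at 2560x1440, about one ninth of the output pixels, and leaves DLSS to fill in the rest.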

The Future of DLSS / Final Thoughts

So, where does DLSS go from here? Well, if history (and NVIDIA’s love for making our wallets cry) tells us anything, DLSS is only going to get smarter, faster, and somehow make our aging GPUs feel young again—like a digital anti-aging cream.

What’s Next?

- Expect even better AI models, probably something like “DLSS 5: Rise of the Frames.”

- AI models so much better that your mouse moves on its own to get you headshots you weren’t going to get anyway. (JK)

- Smarter frame prediction, cleaner ray tracing, and maybe—just maybe—it’ll make RTX On playable without melting your rig.

- We could even see AI-generated assets and dynamic resolution scaling powered by Transformers on steroids.

Final Thoughts

DLSS started as a neat trick and turned into the backbone of modern PC gaming. With DLSS 4 and the power of Transformers, it’s clear NVIDIA isn’t just faking frames—they’re redefining how games are rendered.

So whether you’re a pixel snob, a frame rate junkie, or someone clinging to their 30-series card like it’s the last chopper out of Saigon—DLSS has something for you.

And who knows? Maybe one day it really will turn back time. (I’ll start saving for DLSS 6. Everyone, hold onto your kidneys😁, I’m coming for them.)