Appearance

Anthropic's Model Context Protocol (MCP): A New Standard for AI Integration and Autonomy

by Pranav Hemanth, on 23/03/2025

Before we understand what MCP is, let's first dive into the history of LLM development and capabilities of LLMs overtime.

When GPTs came out, they could be thought of as a smart person you could converse with and who would help you mainly by providing correct information about a question. I like to think of this stage of GPTs as a database with a store of knowledge spanning all of the internet. You would query this database with a question and it could return to you the most apt answer.

One important thing to note here is that the said database here was static and still largely remains so. An LLM would need to be trained on some data initially after which based on the trained data you could query it (ask questions) and it could answer you.

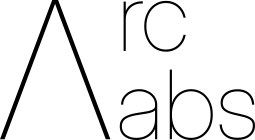

A simple mental image of this would be how Andrej Karpathy created an analogy of this. Take the LLM to be a zip file with all the knowledge of the internet (assuming the whole internet to be the train dataset) on it till a certain point. You can ask this file anything till that point and it would answer.

Source: Andrej Karpathy

Source: Andrej Karpathy

The Challenge of Dynamic Knowledge

But we can quickly see our first problem here. Knowledge is not static. Everyday millions of articles, billions of mails and zillions (i don't know if this is a word XD) of words are written on the internet. This would lead to an impossible scaling problem where knowledge would always be obsolete by the time an LLM was trained. This may not be a problem for things like facts or established knowledge, but what about stocks and prices, or current affairs?

LLM developers sought to tackle this challenge in a couple ways. A simple method was to send web crawlers onto the internet and get fresh data to retrain models often, this is however not feasible or scalable due to the high compute required in training LLMs. There must be better ways-

Enter Large Action Models (LAMs)

A Large Action Model is simply an LLM which can perform actions as an 'Agent'. This kind of a model was capable of searching the internet, for instance, independently to parse for new information and 'learn' from it.

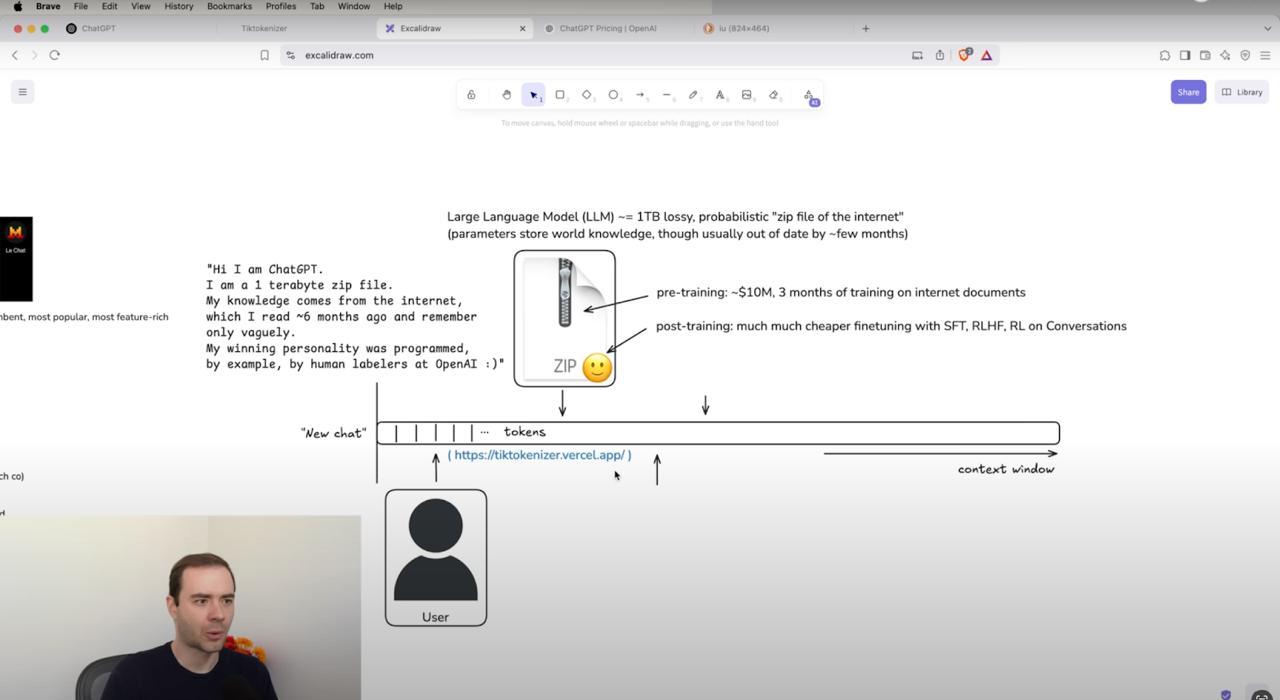

We can see this development of LLMs through the illustration below:

Source: Dr. Ras Mic

Source: Dr. Ras Mic

When GPT was launched it had only the query-response capabilities as discussed above.

Evolving from that with integration of simple tools like Search, Canvas, Calculator, etc LLMs were better able to serve queries with higher precision and lesser resources.

This evolution came largely through developers using existing APIs and frameworks to tie this new LLM ecosystem into the existing services available on the web. A couple well built examples of this would be Cursor, Bolt and ChatGPT tools such as Search, Canvas, Projects, Reasoning, DeepResearch, Custom GPTs etc.

Manus, the AI Agent in news built by the Chinese company Monica is a good example of a meticulously built Agent with a vast toolchain to extend the capabilities of LLMs today.

We are currently in this phase of LLM + Toolchains where companies are building better and more meticulous products where LLMs are able to act independently as agents to achieve a certain task. Claude, Cursor and Warp are some excellent projects which have shown extensive capabilities utilizing toolchaining.

However we are reaching the ceiling for API integration in LLMs due to a glaring problem that websites had to adapt to during the inception of APIs. APIs are not static. A change in a single API service can deem complete microservices and workflows run by LAMs unusable.

The Shift to MCP

The shift we are going through now is the evolution from simple Toolchaining to MCP.

Anthropic AI, the company behind Claude, released a new standard called the Model Context Protocol (MCP) on 25th November 2024. This new protocol aims to act as the 'Translator' between different services on the internet and aims to standardise API development in conjunction with LLMs. The MCP is an open standard designed to facilitate secure, two-way connections between AI models and external data sources. By standardizing how applications provide context to large language models (LLMs), MCP functions similarly to a "USB-C port" for AI applications, enabling seamless integration and communication across diverse platforms.

What is MCP?

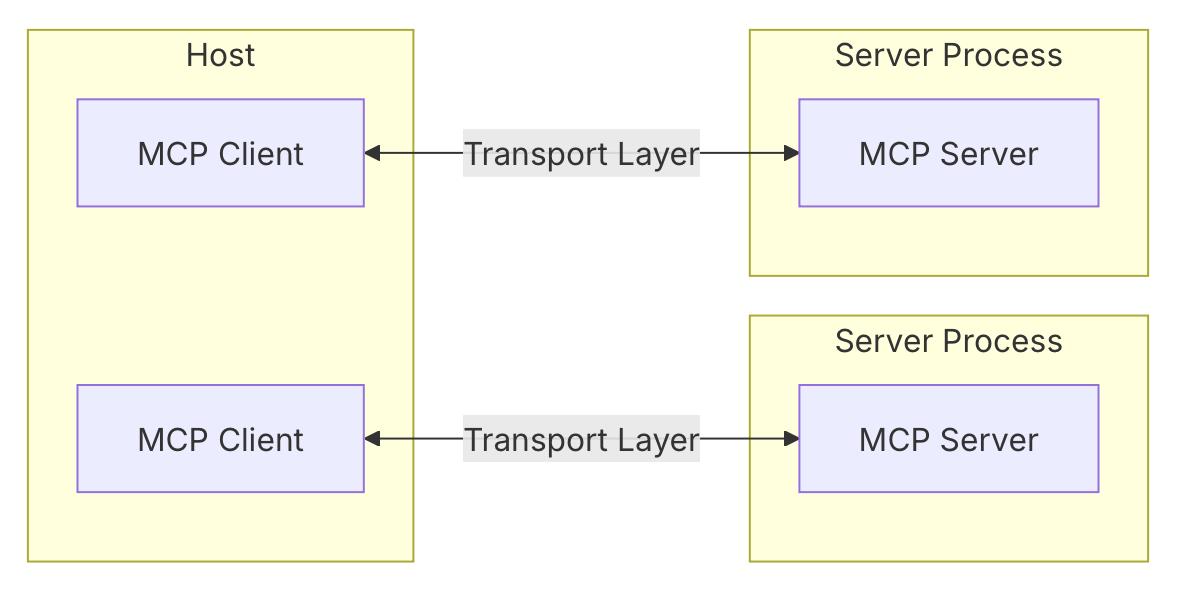

The protocol prescribes a framework of MCP servers and clients:

- MCP Servers: These servers expose data sources, allowing AI applications to access and interact with the data securely.

- MCP Clients: AI applications that connect to MCP servers to retrieve or manipulate data, ensuring a standardized method of communication.

This architecture promotes interoperability, enabling developers to integrate once and connect seamlessly to multiple data sources, thereby reducing the need for custom coding for each dataset. The framework aims to eliminate need for custom integrations and make tool integrations to LLMs plug and play.

Current integration examples for proof of concept are led by Claude by AnthropicAI. Claude AI utilizes MCP to connect with knowledge bases, perform code execution, and interact with tools, making it more effective in responding to complex queries.

Implications of MCP

The further development of MCP is seen by many developers as essential for AI Interoperability. As AI ecosystems expand, the need for standardization in AI-to-tool communication is growing. MCP offers a structured approach to achieving this interoperability, leading to several important implications:

- Standardization in AI Ecosystems – MCP can help unify how different AI models interact with software, reducing compatibility issues and promoting seamless AI deployment.

- Industry Adoption and Improvements – Companies are likely to integrate MCP into AI-powered applications, improving automation, personalization, and decision-making capabilities.

- Expanding AI Applications – Beyond chatbots and virtual assistants, MCP could be applied to robotics, IoT devices, and enterprise AI solutions, enabling more advanced, autonomous systems.

Technical Architecture of MCP

Disclaimer: This part of the article may require you to understand a client-server architecture in context of how network protocols work. Feel free to skip to the end if you wish for a qualitative understanding of MCP over implementation methodologies.

The Model Context Protocol (MCP) is designed with a flexible and extensible architecture, facilitating smooth communication between LLM applications and their integrations. This document outlines the fundamental architectural components and key concepts.

MCP follows a client-server architecture where:

- Hosts are LLM applications (like Claude Desktop or IDEs) that initiate connections

- Clients maintain 1:1 connections with servers, inside the host application

- Servers provide context, tools, and prompts to clients

Source: modelcontextprotocol.io

Source: modelcontextprotocol.io

Core Components

Protocol Layer

- Handles message structuring, request-response mapping, and core communication protocols.

- Key components: Protocol, Client, Server.

Transport Layer

- Manages communication between clients and servers through different transport mechanisms:

- Stdio Transport: Facilitates local communication via standard input/output.

- HTTP with Server-Sent Events (SSE) Transport: Uses SSE for server-to-client communication and HTTP POST for client-to-server interactions.

- All transports rely on JSON-RPC 2.0 for structured message exchanges.

- Manages communication between clients and servers through different transport mechanisms:

Message Types

- Requests: Expect responses from the recipient.

- Results: Indicate successful responses.

- Errors: Signify failures in processing requests.

- Notifications: One-way messages that do not require a response.

Connection Lifecycle

Initialization

- The client initiates an initialize request specifying the protocol version and capabilities.

- The server replies with its version and capabilities.

- The client finalizes the handshake with an initialized notification.

Message Exchange

- Supports both request-response patterns and one-way notifications.

Termination

- Either the client or server can terminate the connection through a clean shutdown, transport disconnection, or due to an error condition.

Error Handling

- Standardized error codes include ParseError, InvalidRequest, MethodNotFound, InvalidParams, and InternalError.

- Errors are handled through:

- Response messages containing error details.

- Transport-level error handling mechanisms.

- Dedicated protocol-level error handlers.

Implementation and Best Practices

Implementation Example

A simple MCP server in TypeScript follows a structured approach:

- Instantiate a Server.

- Define request handlers.

- Connect via the chosen transport mechanism.

Best Practices

Transport Selection

- Use stdio transport for local AI models.

- Use HTTP with SSE for web-based applications, ensuring secure handling.

Message Handling

- Validate all incoming data.

- Implement error handling and timeouts.

- Use progress tokens for long-running processes, providing incremental updates.

Security Considerations

Transport Security

- Secure remote connections using TLS.

- Implement authentication and validate request origins.

Message Validation

- Sanitize input and enforce proper JSON-RPC formatting.

Resource Protection

- Use access controls to regulate API interactions.

- Monitor resource usage to prevent abuse.

Error Handling

- Avoid exposing sensitive error information.

- Log security-relevant errors for auditing and debugging.

Debugging and Monitoring

Logging

- Track requests and responses to diagnose issues.

- Monitor system performance and error rates.

Diagnostics

- Implement periodic health checks.

- Continuously monitor connection states.

Testing

- Simulate edge cases and unexpected errors.

- Perform stress tests to measure system resilience.

Conclusion

MCP marks a significant milestone in AI integration, enhancing the autonomy and intelligence of AI models. By enabling seamless real-time interactions with external tools and data sources, MCP fosters smarter, more efficient AI applications. As AI ecosystems continue evolving, MCP's role in standardizing and expanding AI-tool communication will be indispensable.